Physical Science Guided Reading and Study Workbook Chapter 20

A paper's "Methods" (or "Materials and Methods") section provides information on the study's design and participants. Ideally, it should be so clear and detailed that other researchers can repeat the study without needing to contact the authors. You will need to examine this section to determine the study's strengths and limitations, which both affect how the study's results should be interpreted.

Demographics

The "Methods" section usually starts by providing information on the participants, such as age, sex, lifestyle, health status, and method of recruitment. This information will help you decide how relevant the study is to you, your loved ones, or your clients.

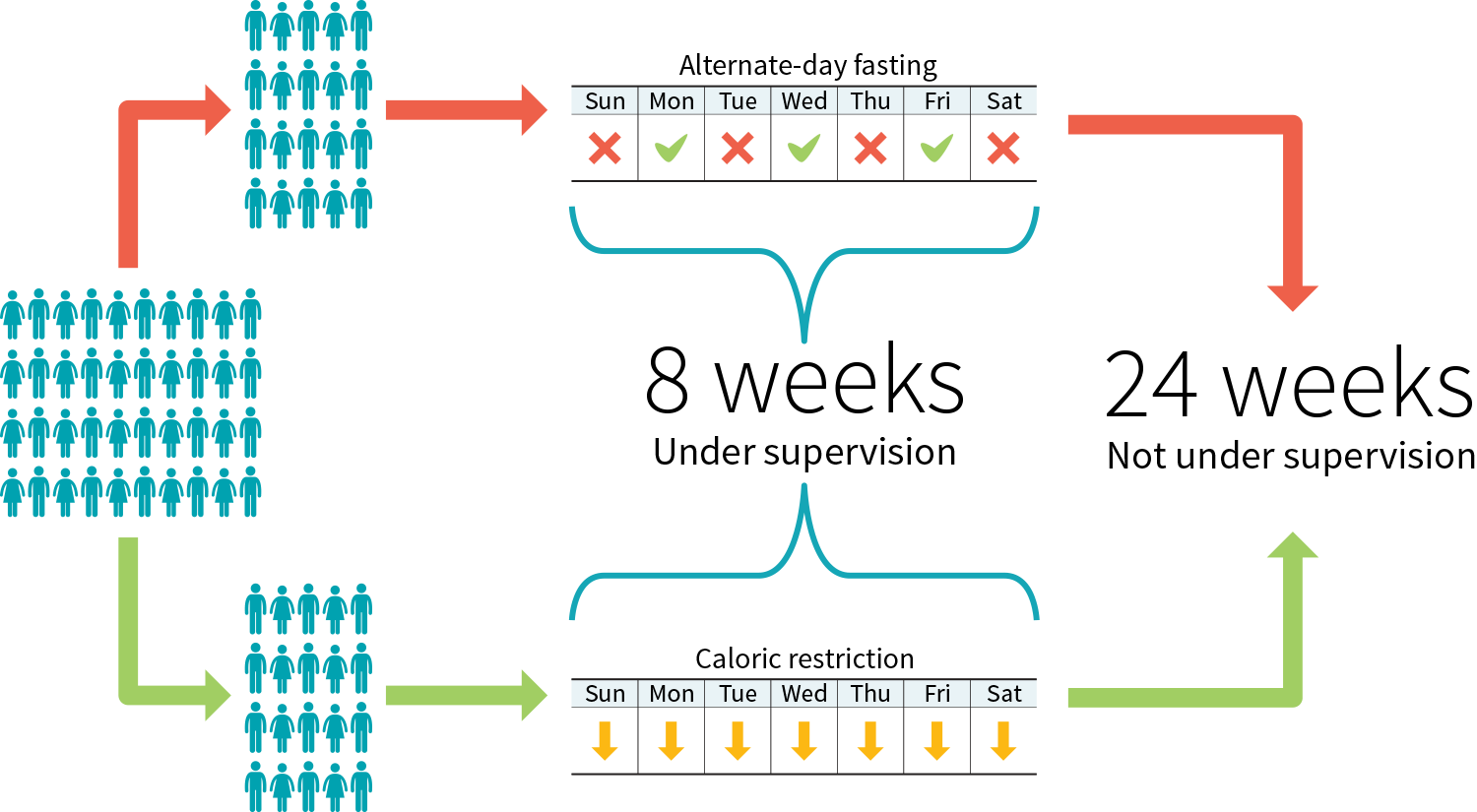

Figure 3: Example study protocol to compare 2 diets

The demographic information can be lengthy, and you might be tempted to skip it, yet it affects both the reliability of the study and its applicability.

Reliability. The larger the sample size of a study (i.e., the more participants it has), the more reliable its results. Note that a study often starts with more participants than it ends with; diet studies, notably, commonly see a fair number of dropouts.

Applicability. In health and fitness, applicability matters because a compound or intervention (e.g., exercise, diet, supplement) that is useful for one person may be a waste of money, or worse, a danger, for another. For instance, while creatine is widely recognized as safe and effective, there are "nonresponders" for whom this supplement fails to improve exercise performance.

Your mileage may vary, as the creatine example shows, but a study's demographic information can help you assess its applicability. If a trial only recruited men, for instance, women reading the study should keep in mind that its results may be less applicable to them. Likewise, an intervention tested in college students may yield different results when performed on people from a retirement facility.

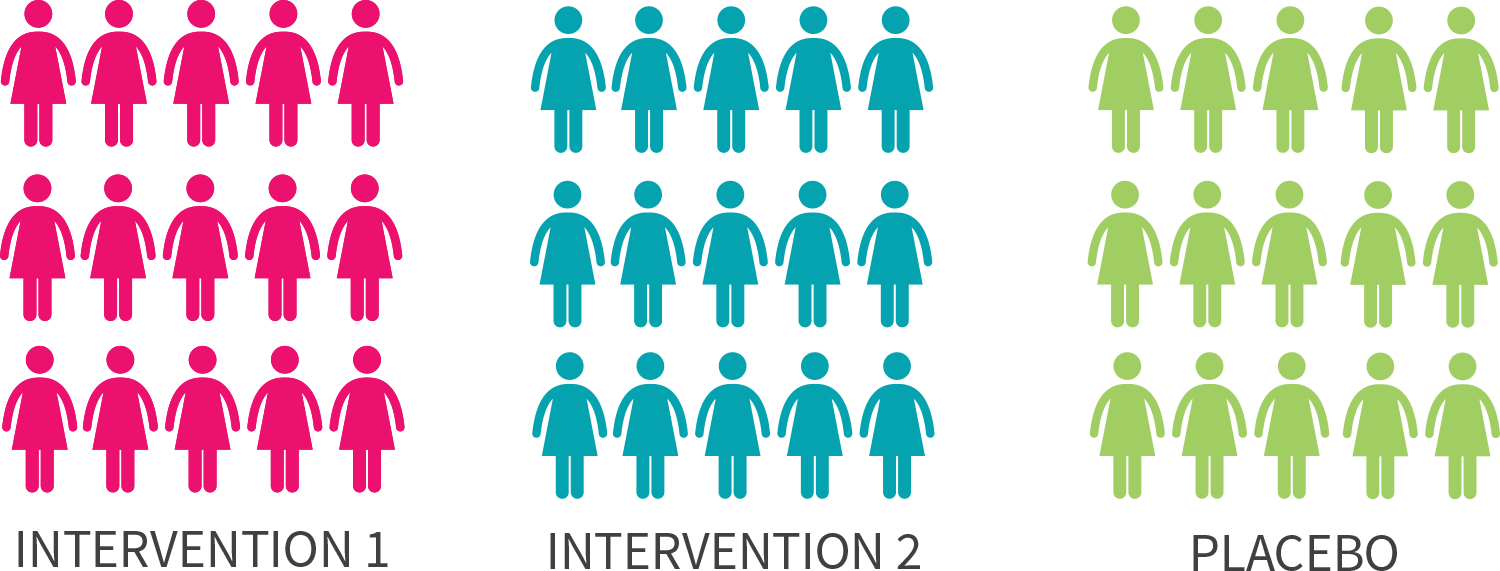

Figure 4: Some trials are sex-specific

Furthermore, different recruiting methods will attract different demographics, and so can influence the applicability of a trial. In most scenarios, trialists will use some form of "convenience sampling". For instance, studies run by universities will often recruit among their students. However, some trialists will use "random sampling" to make their trial's results more applicable to the general population. Such trials are generally called "augmented randomized controlled trials".

Confounders

Finally, the demographic information will usually mention whether people were excluded from the study, and if so, for what reason. Most often, the reason is the existence of a confounder: a variable that would confound (i.e., influence) the results.

For example, if you study the effect of a resistance training program on muscle mass, you don't want some of the participants to take muscle-building supplements while others don't. Either you'll want all of them to take the same supplements or, more likely, you'll want none of them to take any.

Likewise, if you study the effect of a muscle-building supplement on muscle mass, you don't want some of the participants to exercise while others do not. You'll either want all of them to follow the same workout program or, less likely, you'll want none of them to exercise.

It is of course possible for studies to have more than two groups. You could have, for instance, a study on the effect of a resistance training program with the following four groups:

- Resistance training program + no supplement
- Resistance training program + creatine
- No resistance training + no supplement
- No resistance training + creatine

But if your study has four groups instead of two, then for each group to keep the same sample size you need twice as many participants, which makes your study more difficult and expensive to run.

When you come right down to it, any differences between the participants are variables and thus potential confounders. That's why trials in mice use specimens that are genetically very close to one another. That's also why trials in humans seldom try to test an intervention on a diverse sample of people. A trial restricted to older women, for instance, has in effect eliminated age and sex as confounders.

As we saw above, with a great enough sample size, we can have more groups. We can even create more groups after the study has run its course, by performing a subgroup analysis. For instance, if you run an observational study on the effect of red meat on thousands of people, you can later separate the data for "male" from the data for "female" and run a separate analysis on each subset of data. However, subgroup analyses of this sort are considered exploratory rather than confirmatory and could potentially lead to false positives. (When, for example, a blood test erroneously detects a disease, it is called a false positive.)

Design and endpoints

The "Methods" section will also describe how the study was run. Design variants include single-blind trials, in which only the participants don't know if they're receiving a placebo; observational studies, in which researchers only observe a demographic and take measurements; and many more. (See figure 2 above for more examples.)

More specifically, this is where you will learn about the length of the study, the dosages used, the workout regimen, the testing methods, and so on. Ideally, as we said, this information should be so clear and detailed that other researchers can repeat the study without needing to contact the authors.

Finally, the "Methods" section can also make clear the endpoints the researchers will be looking at. For instance, a study on the effects of a resistance training program could use muscle mass as its primary endpoint (its main criterion to judge the outcome of the study) and fat mass, strength performance, and testosterone levels as secondary endpoints.

One trick of studies that want to find an effect (sometimes so that they can serve as marketing material for a product, but often simply because studies that show an effect are more likely to get published) is to collect many endpoints, then to make the paper about the endpoints that showed an effect, either by downplaying the other endpoints or by not mentioning them at all. To prevent such "data dredging/fishing" (a method whose effectiveness at producing spurious findings was demonstrated through the hilarious chocolate hoax), many scientists push for the preregistration of studies.
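To see why collecting many endpoints works so well as a trick, here is a minimal Python sketch. It assumes every endpoint is tested independently at a 5% threshold and that the intervention truly does nothing; real endpoints are usually correlated, so treat the numbers as illustrative rather than exact.

```python
def chance_of_a_false_positive(n_endpoints: int, alpha: float = 0.05) -> float:
    """Probability that at least one of n independent endpoints comes out
    'significant' when the intervention actually has no effect."""
    # Each null endpoint avoids a false positive with probability (1 - alpha);
    # all of them avoid it with probability (1 - alpha) ** n_endpoints.
    return 1 - (1 - alpha) ** n_endpoints


for n in (1, 5, 20):
    print(f"{n:>2} endpoints: {chance_of_a_false_positive(n):.0%}")
```

Under these assumptions, a study that measures 20 endpoints has roughly a 64% chance of finding at least one "significant" effect by luck alone, which is exactly what selective reporting exploits.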

Sniffing out the tricks used by less scrupulous authors is, alas, part of the skill set you'll need to develop to assess published studies.

Interpreting the statistics

The "Methods" section usually concludes with a hearty statistics discussion. Determining whether an appropriate statistical analysis was used for a given trial is an entire field of study, so we suggest you don't sweat the details; try to focus on the big picture.

First, let's clear up two common misunderstandings. You may have read that an effect was significant, only to later discover that it was very small. Similarly, you may have read that no effect was found, yet when you read the paper you found that the intervention group had lost more weight than the placebo group. What gives?

The problem is simple: those quirky scientists don't speak like normal people do.

For scientists, significant doesn't mean important; it means statistically significant. An effect is significant if the data collected over the course of the trial would be unlikely if there really was no effect.

Therefore, an effect can be significant yet very small: 0.2 kg (0.5 lb) of weight loss over a year, for instance. More to the point, an effect can be significant yet not clinically relevant (meaning that it has no discernible effect on your health).

Relatedly, for scientists, no effect usually means no statistically significant effect. That's why you may review the measurements collected over the course of a trial and notice an increase or a decrease, yet read in the conclusion that no changes (or no effects) were found. There were changes, but they weren't significant. In other words, there were changes, but so small that they may be due to random fluctuations (they may also be due to an actual effect; we can't know for sure).

We saw earlier, in the "Demographics" section, that the larger the sample size of a study, the more reliable its results. Relatedly, the larger the sample size of a study, the greater its power to detect whether small effects are significant. A small change is less likely to be due to random fluctuations when found in a study with a thousand people, let's say, than in a study with ten people.
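The effect of sample size can be made concrete with a small calculation. The Python sketch below is a simplification we chose for illustration: it uses a two-sample z-test with a known, shared standard deviation (real trials usually run t-tests or more complex models), and the 0.5 kg difference and 2 kg SD are hypothetical numbers.

```python
import math


def two_sided_p_value(mean_diff: float, sd: float, n_per_group: int) -> float:
    """Two-sample z-test p-value, assuming both groups share a known SD."""
    standard_error = sd * math.sqrt(2 / n_per_group)
    z = mean_diff / standard_error
    # erfc(|z| / sqrt(2)) equals 2 * (1 - Phi(|z|)), the two-sided tail area.
    return math.erfc(abs(z) / math.sqrt(2))


# The same hypothetical 0.5 kg difference (SD 2 kg), observed in a small and a large study.
for n in (10, 1000):
    print(f"n = {n:>4} per group: p = {two_sided_p_value(0.5, 2.0, n):.2g}")
```

The observed difference is identical in both cases, but with 10 people per group the p-value lands far above 0.05, while with 1,000 per group it lands far below it.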

This explains why a meta-analysis may find significant changes by pooling the data of several studies which, independently, found no significant changes.
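One common way to pool studies is fixed-effect, inverse-variance weighting, sketched below in Python. This is only one of several meta-analytic methods (many meta-analyses use random-effects models and check for heterogeneity), and the three studies are hypothetical.

```python
import math


def two_sided_p(z: float) -> float:
    # Two-sided tail area of the standard normal distribution.
    return math.erfc(abs(z) / math.sqrt(2))


def fixed_effect_pool(estimates, standard_errors):
    """Inverse-variance (fixed-effect) pooling: more precise studies get more weight."""
    weights = [1 / se ** 2 for se in standard_errors]
    pooled = sum(w * x for w, x in zip(weights, estimates)) / sum(weights)
    pooled_se = math.sqrt(1 / sum(weights))
    return pooled, pooled_se


# Three hypothetical studies, each finding 0.5 kg more weight loss (SE 0.35 kg).
estimates = [0.5, 0.5, 0.5]
ses = [0.35, 0.35, 0.35]
for x, se in zip(estimates, ses):
    print(f"single study: p = {two_sided_p(x / se):.3f}")
pooled, pooled_se = fixed_effect_pool(estimates, ses)
print(f"pooled: {pooled:.2f} kg, p = {two_sided_p(pooled / pooled_se):.3f}")
```

Each study alone misses the 0.05 threshold, but pooling shrinks the standard error (here by a factor of about 1.7), and the combined estimate becomes significant.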

P-values 101

Most often, an effect is said to be significant if the statistical analysis (run by the researchers post-study) delivers a p-value that isn't higher than a certain threshold (set by the researchers pre-study). We'll call this threshold the threshold of significance.

Understanding how to interpret p-values correctly can be tricky, even for specialists, but here's an intuitive way to think about them:

Think about a coin toss. Flip a coin 100 times and you will get roughly a 50/50 split of heads and tails. Not terribly surprising. But what if you flip this coin 100 times and get heads every time? Now that's surprising! For the record, the probability of it actually happening is 0.00000000000000000000000000008%.
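If you'd rather not count zeros, the probability of 100 heads in a row, (1/2)^100, can be computed exactly in Python with fraction arithmetic and then printed in scientific notation:

```python
from fractions import Fraction

# Exact probability of 100 heads in 100 tosses of a fair coin: (1/2) ** 100.
probability = Fraction(1, 2) ** 100
percent = float(probability * 100)
print(f"{percent:.1e}%")  # 7.9e-29%
```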

You can think of p-values in terms of getting all heads when flipping a coin.

- A p-value of 5% (p = 0.05) is about as surprising as getting all heads on 4 coin tosses.
- A p-value of 0.5% (p = 0.005) is about as surprising as getting all heads on 8 coin tosses.
- A p-value of 0.05% (p = 0.0005) is about as surprising as getting all heads on 11 coin tosses.

Note that this comparison does not turn a p-value into the probability of that many heads: the probability of getting 4 heads in a row is 6.25%, not 5%. If you want to convert a p-value into coin tosses (technically called S-values) and a probability percentage, check out the converter here.
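The conversion itself is short. In the Python sketch below, the S-value is the surprisal −log2(p), rounded to the nearest whole toss; we don't know how the linked converter rounds, so this is our own approximation rather than its exact method.

```python
import math


def s_value(p: float) -> float:
    """Surprisal of a p-value in bits: roughly how many heads in a row
    from a fair coin would be equally surprising."""
    return -math.log2(p)


def all_heads_probability(tosses: int) -> float:
    # Probability of getting heads on every one of `tosses` fair coin tosses.
    return 0.5 ** tosses


for p in (0.05, 0.005, 0.0005):
    tosses = round(s_value(p))
    print(f"p = {p}: S = {s_value(p):.2f} bits, ~{tosses} heads in a row "
          f"(probability {all_heads_probability(tosses):.2%})")
```

For p = 0.05 the S-value is about 4.3 bits, which rounds to 4 tosses; the probability of those 4 heads is 6.25%, which is why the two numbers don't match exactly.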

As we saw, an effect is significant if the data collected over the course of the trial would be unlikely if there really was no effect. Now we can add that the lower the p-value (under the threshold of significance), the more confident we can be that an effect is significant.

P-values 201

All right. Fair warning: we're going to get nerdy. Well, nerdier. Feel free to skip this section and resume reading here.

Still with us? All right, then: let's get at it. As we've seen, researchers run statistical analyses on the results of their study (usually one analysis per endpoint) in order to determine whether or not the intervention had an effect. They commonly make this determination based on the p-value of the results, which tells you how likely a result at least as extreme as the one observed would be if the null hypothesis, among other assumptions, were true.

Ah, jargon! Don't panic, we'll explain and illustrate those concepts.

In every experiment there are generally two opposing statements: the null hypothesis and the alternative hypothesis. Let's imagine a fictional study testing the weight-loss supplement "Better Weight" against a placebo. The two opposing statements would look like this:

- Null hypothesis: compared to placebo, Better Weight does not increase or decrease weight. (The hypothesis is that the supplement's effect on weight is null.)
- Alternative hypothesis: compared to placebo, Better Weight does decrease or increase weight. (The hypothesis is that the supplement has an effect, positive or negative, on weight.)

The purpose is to see whether the effect (here, on weight) of the intervention (here, a supplement called "Better Weight") is better, worse, or the same as the effect of the control (here, a placebo, but sometimes the control is another, well-studied intervention; for instance, a new drug can be studied against a reference drug).

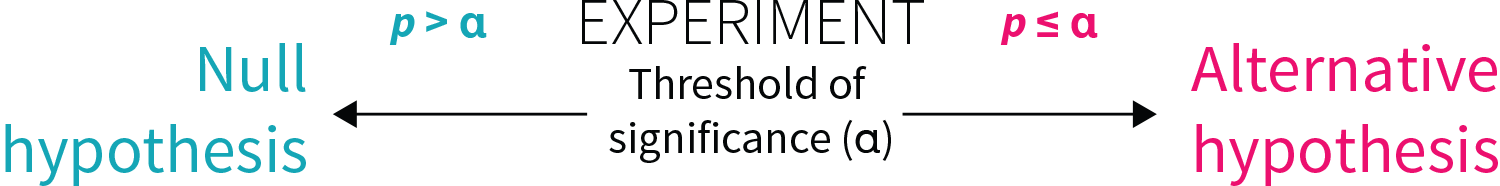

For that purpose, the researchers usually set a threshold of significance (α) before the trial. If, at the end of the trial, the p-value (p) from the results is less than or equal to this threshold (p ≤ α), there is a significant difference between the effects of the two treatments studied. (Remember that, in this context, significant means statistically significant.)

Figure five: Threshold for statistical significance

The most commonly used threshold of significance is 5% (α = 0.05). It means that if the null hypothesis (i.e., the idea that there was no difference between treatments) is true, then, after repeating the experiment an infinite number of times, the researchers would get a false positive (i.e., would find a significant effect where there is none) about 5% of the time (p ≤ 0.05).
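You can check this claim by simulation: repeat a no-effect experiment many times and count how often p ≤ 0.05. The Python sketch below assumes normally distributed outcomes and a two-sample z-test with a known SD of 1 (a simplification of the t-tests most trials use); the trial count, group size, and seed are arbitrary choices.

```python
import math
import random


def z_test_p(a, b, sd=1.0):
    # Two-sample z-test with a known, shared SD (a simplification of the usual t-test).
    n_a, n_b = len(a), len(b)
    se = sd * math.sqrt(1 / n_a + 1 / n_b)
    z = (sum(a) / n_a - sum(b) / n_b) / se
    return math.erfc(abs(z) / math.sqrt(2))


def false_positive_rate(n_trials=4000, n_per_group=30, alpha=0.05, seed=42):
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_trials):
        # Both groups come from the same distribution: the null hypothesis is true.
        treatment = [rng.gauss(0, 1) for _ in range(n_per_group)]
        placebo = [rng.gauss(0, 1) for _ in range(n_per_group)]
        if z_test_p(treatment, placebo) <= alpha:
            hits += 1
    return hits / n_trials


print(false_positive_rate())
```

With a finite number of simulated trials the printed share won't be exactly 0.05, but it should land close to it, and it gets closer as `n_trials` grows.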

Generally, the p-value is a measure of consistency between the results of the study and the idea that the two treatments have the same effect. Let's see how this would play out in our Better Weight weight-loss trial, where one of the treatments is a supplement and the other a placebo:

- Scenario 1: The p-value is 0.80 (p = 0.80). The results are more consistent with the null hypothesis (i.e., the idea that there is no difference between the two treatments). We conclude that Better Weight had no significant effect on weight loss compared to placebo.
- Scenario 2: The p-value is 0.01 (p = 0.01). The results are more consistent with the alternative hypothesis (i.e., the idea that there is a difference between the two treatments). We conclude that Better Weight had a significant effect on weight loss compared to placebo.

While p = 0.01 is a significant result, so is p = 0.000001. So what information do smaller p-values offer us? All other things being equal, they give us greater confidence in the findings. In our example, a p-value of 0.000001 would give us greater confidence that Better Weight had a significant effect on weight change. But sometimes things aren't equal between the experiments, making direct comparison between two experiments' p-values tricky and sometimes downright invalid.

Even if a p-value is significant, remember that a significant effect may not be clinically relevant. Let's say that we found a significant effect of p = 0.01 showing that Better Weight improves weight loss. The catch: Better Weight produced only 0.2 kg (0.5 lb) more weight loss compared to placebo after one year, a difference too small to have any meaningful effect on health. In this case, though the effect is statistically significant, the real-world effect is too small to justify taking this supplement. (This type of scenario is more likely to take place when the study is large since, as we saw, the larger the sample size of a study, the greater its power to detect whether small effects are significant.)
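One way to keep the two questions apart is to report them separately, as in this Python sketch. The z-test with a known SD, the 3 kg SD, the sample sizes, and especially the 2 kg "minimum relevant" cutoff are all hypothetical values chosen for illustration, not established clinical thresholds.

```python
import math


def assess(mean_diff_kg, sd_kg, n_per_group, alpha=0.05, min_relevant_kg=2.0):
    """Report statistical significance and (hypothetical) clinical relevance separately."""
    standard_error = sd_kg * math.sqrt(2 / n_per_group)
    p = math.erfc(abs(mean_diff_kg / standard_error) / math.sqrt(2))
    return {
        "p": p,
        "significant": p <= alpha,
        # min_relevant_kg is an illustrative cutoff, not an established clinical threshold.
        "clinically_relevant": abs(mean_diff_kg) >= min_relevant_kg,
    }


# 0.2 kg extra weight loss after a year, SD 3 kg (hypothetical values).
print(assess(0.2, 3.0, 5000))  # large trial
print(assess(0.2, 3.0, 50))    # small trial
```

The same 0.2 kg difference is significant with 5,000 people per group and not significant with 50, yet it falls short of the relevance cutoff in both cases: sample size changes the p-value, not the size of the effect.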

Finally, we should mention that, though the most commonly used threshold of significance is 5% (p ≤ 0.05), some studies require greater certainty. For instance, for genetic epidemiologists to declare that a genetic association is statistically significant (say, to declare that a gene is associated with weight gain), the threshold of significance is usually set at 0.0000005% (p ≤ 0.000000005), which corresponds to getting all heads on 28 coin tosses. The probability of this happening is about 0.00000037%.

P-values: Don't worship them!

Finally, keep in mind that, while important, p-values aren't the final say on whether a study's conclusions are accurate.

We saw that researchers too eager to find an effect in their study may resort to "data fishing". They may also try to lower p-values in various ways: for instance, they may run different analyses on the same data and only report the significant p-values, or they may recruit more and more participants until they get a statistically significant result. These bad scientific practices are known as "p-hacking" or "selective reporting". (You can read about a real-life example of this here.)
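The "keep recruiting until it's significant" tactic can be simulated. In this Python sketch, the supplement truly does nothing, and a hypothetical researcher adds 10 participants per group at a time (up to 100), peeking at a z-test p-value (known SD of 1, our simplification) after every batch and stopping as soon as p ≤ 0.05. Batch size, maximum size, and seed are arbitrary assumptions.

```python
import math
import random


def optional_stopping_rate(n_sims=2000, step=10, max_n=100, alpha=0.05, seed=1):
    """Share of no-effect trials declared 'significant' when the researcher
    peeks after every batch of recruits and stops as soon as p <= alpha."""
    rng = random.Random(seed)
    false_positives = 0
    for _ in range(n_sims):
        sum_a = sum_b = 0.0
        n = 0
        while n < max_n:
            # Recruit another batch per group; both groups share the same
            # distribution, so any "effect" is pure noise.
            sum_a += sum(rng.gauss(0, 1) for _ in range(step))
            sum_b += sum(rng.gauss(0, 1) for _ in range(step))
            n += step
            z = (sum_a / n - sum_b / n) / math.sqrt(2 / n)  # known SD = 1
            if math.erfc(abs(z) / math.sqrt(2)) <= alpha:
                false_positives += 1
                break
    return false_positives / n_sims


print(optional_stopping_rate())                     # peeking after every batch
print(optional_stopping_rate(step=100, max_n=100))  # a single, pre-planned look
```

With a single pre-planned look, the false-positive rate stays near the nominal 5%; with ten peeks, it climbs well above that, even though nothing about the data-generating process changed.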

While a study's statistical analysis usually accounts for the variables the researchers were trying to control for, p-values can also be influenced (on purpose or not) by study design, hidden confounders, the types of statistical tests used, and much, much more. When evaluating the strength of a study's design, imagine yourself in the researcher's shoes and consider how you could torture a study to make it say what you want and advance your career in the process.

hildebrandount1995.blogspot.com

Source: https://examine.com/guides/how-to-read-a-study/
